We probably wouldn’t be doing what we do if Steve Jobs hadn’t done what he did.

To mark his passing this week, I asked the studio for their stories of first contact with Apple.

Kari:

In 1983, when I was 10 years old, my school got about 10 shiny new Apple IIe computers and decided that we should learn a little basic programming. They divided us into two groups. Half of the class learned Basic, and the other half – the group that I was in – learned Logo. I remember feeling like we were pretty important and very cool for learning how to do computer programming. And I loved that I could change a few letters and numbers and make the turtle do different things. It gave me a great sense of autonomy.

Alex:

The thing about buying an Apple product is that you’re sold an experience. It’s not all about the industrial design of the product, or the UI, or anything else. It’s the Russian doll effect of unpeeling layers of a sealed box and feeling like you’ve bought something really special, which is something I’d never really experienced with a consumer product until I bought my first iBook at uni. If you can make people smile before they’ve even lifted the product out of the box, I think you’re almost halfway there.

Denise:

My first introduction to the world of computing was at home. We had a Commodore PET and graduated to the BBC Micro. I have only one sibling, so aside from a few fights over who got to play Space Invaders next, we were lucky enough to be able to use a computer when we wanted to. It was never a big deal; there was no fear involved for six-year-old me.

Two things changed this. First, the BBC moved from the front room to my father’s study. Home computing was work computing, not a play thing. Second, we started ‘doing computers’ at school. This meant looking over the shoulders of 30 other kids at the one, perhaps two, computers available. It meant a fight to get to the front—a fight I wasn’t that fussed about joining. (I’d already seen a computer anyway).

And so I didn’t touch a computer again until university in the early 90s, by which time I’d learnt to fear them. We weren’t trained in ‘desktop publishing’ on my degree course. Yet again, scheduling educational computer time involved a bit of a fight, and so I squared up to a Mac at last, and wondered how the hell I was going to get the file I’d just made off the desktop and on to a disk.

After a split second’s thought, I picked up the picture of the file and put it on the picture of a disk. Seemed obvious. Was obvious. Worked. Turned out that using computers was really easy.

Better than that, it meant that when I said yes to my first design job as a penniless graduate, and was handed a magazine to design and a deadline for the end of the week, I had a tool to use. Not quite as easy as using a pencil, but not so far off.

At the end of the month, I got paid. Thanks Steve, for feeding me.

Joe:

One of my first encounters with a Mac was back in 1996 during a High School ‘Design & Technology’ lesson. I think it was a Macintosh LC 580. Anyway, it was reasonably new but already grubby with the greasy fingerprints of overzealous teenagers. I turned it on and the screen flickered with light. A blinking apparition of a disk appeared alongside a question mark. I remember taking this to mean that it had crashed so I reached back, opened my hand and struck the monitor really, really hard. The next thing I remember was a jolt of shock as the class teacher screamed at me from across the room. I got sent out. Apparently the question mark was normal.

The second and more favourable memory was the first time I saw pictures of the first iPhone on the internet. I remember looking at it and thinking that I was seeing the device that featured in so many of my sci-fi fuelled childhood dreams. A screen that you could hold in your palm. That would show you videos. That would let you communicate with your friends. Find your way around. Somehow Apple had made real something that I could only conjure up in my imagination, and it felt magic to be part of the generation that got to see it happen.

Simon:

1994, using a Macintosh II in IT at secondary school. My only other prior exposure to computers had been Apricot and Amstrad (we had a CPC6128 with a colour screen). I was amazed by the tiny size and loading speed. No tapes! It was the first computer I’d ever used with more than one word processing font.

Matthew:

The first Mac I owned was an LC475, and that pizza box unit is still at my mum’s house, the insides chewed away by mice, which is what happens if you leave computers hanging around in houses in the countryside. I loved that computer: I made fanzines and I made art. I connected to my first BBS, through a 2400 baud modem I bought from a classifieds ad at the back of a magazine. When I connected, that very first time, when I saw the future and everything changed, pivoted on the spot and pointed towards a much larger, very different future, the stereo was playing Pink Floyd’s A Momentary Lapse of Reason. The standout single from that album is called “Learning to Fly.”

The first Mac I saw was at the house of my dad’s friend, a man named Dave, and he had one of those all-in-one Macs, and I was very young. This was a long time before the LC475 that I had, and the strong memory of seeing it was the reason I would, when I was older, get that LC475. I was amazed at three things: the GUI, which looked like pen-and-ink draftsmanship; that the power button was on the keyboard instead of being on the back of the box, and that the keyboard was a separate, independent thing; and that there was no computer: there was just the screen, the thing that you used. I couldn’t believe it. I looked at it for a long time.

Jack:

I don’t really care what anyone other than Steve does.

Timo:

‘Bloody Steve’ we used to shout, as Finder windows lost their positions, as CDs were teased with paperclips or the screens of Titanium laptops fell off. Schulze & I have lambasted Jobs over the years for faults in OSes and problems with Apple hardware, but that was before we realised how hard it is to do even basic hardware and software properly at scale. My respect for him grew enormously as Apple moved from computing and swallowed up ever more industries that I cared about. Perhaps the most significant thing I have learnt from Jobs is that his products were absolutely his politics.

My story:

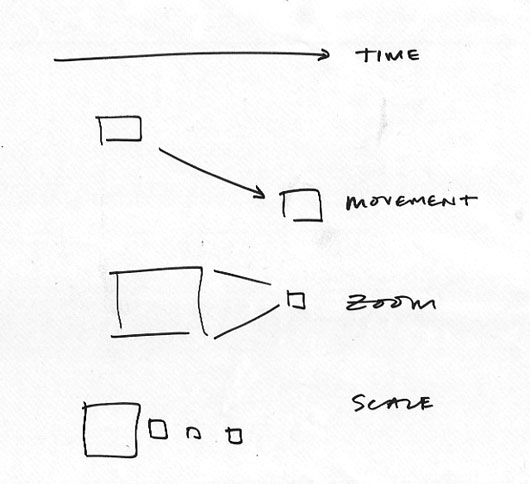

It was 1986, I was 14, and working at Harris Printers in my hometown in South Wales after school every day and most Saturdays. I cleaned, collated, folded, packed print. Sometimes I got to make litho plates on the big Agfa camera, and sometimes I got to use the ancient treadle-powered letterpress. I sometimes got to do layout with Letraset and typesetting galleys from an IBM golfball printer. I convinced the owner that something called DeeTeePee was the next big thing, and we should buy a Mac SE/30, QuarkXPress and Adobe Illustrator 1.0. I think with the Radius display and LaserWriter+ it must have come to about £20k or so. It was an incredible machine. What you saw was what you did was what you got. You moved things on a screen that seemed real, not abstractions. My only computer experiences, like most kids’ till then, had been a BBC Model B, or a VIC-20, abstract and arcane. This was something that everyone, in the printers, in my family, my school friends – everyone – could see was different. It was when I first felt I wouldn’t have to choose between technology and art.

Thanks Steve.