As a studio we have recently been quite preoccupied with two themes. One is new systems of time and place in interactive experiences. The second is the emerging ecology of new artificial eyes – “The Robot Readable World”. We’re interested in the markings and shapes that attract the attention of computer vision, connected eyes that see differently to us.

We recently came across an idea that seems to combine both, and thought we’d talk about it today as a ‘product sketch’ in video, hopefully to start a conversation.

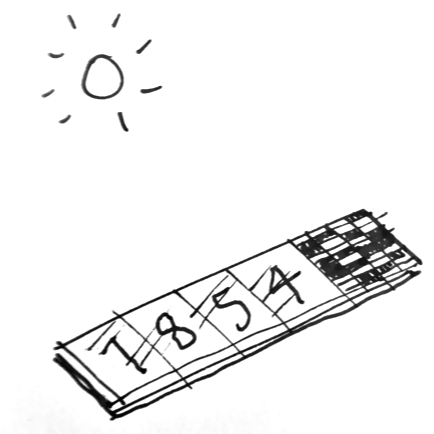

Our “Clock for Robots” is something from this coming robot-readable world. It acts as dynamic signage for computers. It is an object that signals both time and place to artificial eyes.

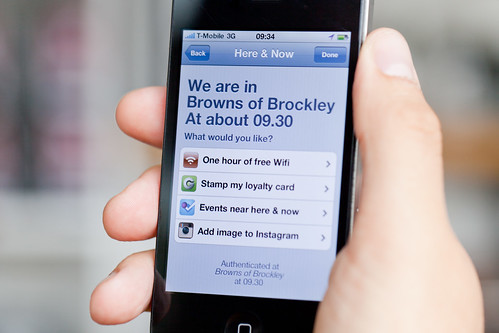

It is a sign in a public space displaying a dynamic code that is both here and now. Connected devices in this space are looking for this code, so the space can broker authentication and communication more efficiently.

The difference between fixed signage and changing LED displays is well understood for humans, but hasn’t yet been expressed for computers as far as we know. You might think about those coded digital keyfobs that come with bank accounts, except this is for places, things and smartphones.
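To make the keyfob comparison concrete, here is a minimal sketch of how a code could be tied to both a place and a moment. It is not how the clock in the film works. It assumes the sign and the local service share a secret, and the names `place_code` and `is_here_and_now`, the 30-second tick and the choice of HMAC-SHA256 are all hypothetical, chosen only for illustration.

```python
import hashlib
import hmac
import struct

STEP_SECONDS = 30  # assumed tick length; the post does not specify one


def place_code(secret: bytes, place_id: str, unix_time: float,
               step: int = STEP_SECONDS) -> str:
    """Return a short code that depends on both the place and the current tick."""
    counter = int(unix_time // step)  # which tick we are in
    message = place_id.encode("utf-8") + b"|" + struct.pack(">Q", counter)
    digest = hmac.new(secret, message, hashlib.sha256).hexdigest()
    return digest[:12]  # truncated so it fits comfortably in a small visual code


def is_here_and_now(secret: bytes, place_id: str, seen_code: str,
                    unix_time: float, step: int = STEP_SECONDS,
                    drift_steps: int = 1) -> bool:
    """Accept a code seen by a device if it matches the current tick or a neighbour."""
    for offset in range(-drift_steps, drift_steps + 1):
        expected = place_code(secret, place_id, unix_time + offset * step, step)
        if hmac.compare_digest(expected, seen_code):
            return True
    return False
```

The sign would display `place_code(...)` as its changing marking, and a service would call `is_here_and_now(...)` on whatever a phone reports having seen. Allowing one tick of drift either side is a judgment call: it tolerates slightly unsynchronised clocks at the cost of a slightly longer window in which an old photo of the sign would still pass.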

Timo says about this:

One of the things I find most interesting about this is how turning a static marking like a QR code into a dynamic piece of information somehow makes it seem more relevant. Less of a visual imposition on the environment and more part of a system. Better embedded in time and space.

In a way, our clock in the cafe is kind of like holding up today’s newspaper in pictures to prove it’s live. It is a very narrow, useful piece of data, which is relevant only because of context.

If you think about RFID technology, proximity is security, and touch is interaction. With our clocks, the line-of-sight is security and ‘seeing’ is the interaction.

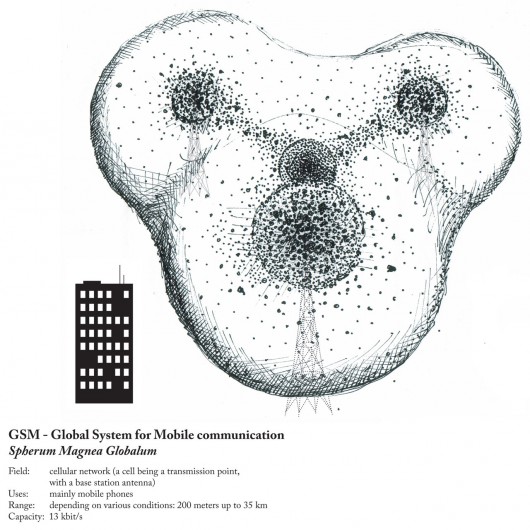

Our mobiles have changed our relationship to time and place. They have radio/GPS/wifi so we always know the time and we are never lost, but that sense of place is wobbly and bubbly, and doesn’t have the same obvious edges we associate with places… it doesn’t happen at human scale.

^ “The bubbles of radio” by Ingeborg Marie Dehs Thomas

Line of sight to our clock now gives us a ‘trusted’ or ‘authenticated’ place. A human-legible sense of place is matched to what the phone ‘sees’. What if digital authentication/trust was achieved through more human scale systems?

Timo again:

In the film there is an app that looks at the world but doesn’t represent itself as a camera (very different from most barcode readers for instance, that are always about looking through the device’s camera). I’d like to see more exploration of computer vision that wasn’t about looking through a camera, but about our devices interpreting the world and relaying that back to us in simple ways.

We’re interested in this for a few different reasons.

Most obviously, perhaps, because of what it might open up for quick authentication for local services: anything that might be helped by my phone declaring ‘I am definitely here and now’. For example, as we’ve said, wifi access in a busy coffee shop, authentication of coupons or special offers, or foursquare event check-ins.

What if there were tagging bots searching photos for our clocks…

…a bit like the astrometry bot looking for constellations on Flickr?

But there are lots of directions this thinking could be taken in. We’re thinking of it as something of a building block for something bigger.

Spimes are an idea conceived by Bruce Sterling in his book “Shaping Things” where physical things are directly connected to metadata about their use and construction.

We’re curious as to what might happen if you start to use these dynamic signs for computer vision in connection with those ideas. For instance, what if you could make a tiny clock as a cheap solar-powered e-ink sticker that you could buy in packs of ten, each with its own unique identity, that ticks away constantly? That’s all it does.

This could help make anything a bit more spime-y – a tiny bookmark of where your phone saw this thing in space and time.

Maybe even just out of the corner of its eye…

As I said – this is a product sketch – very much a speculation that asks questions rather than a finished, finalised thing.

We wanted to see whether we could make more of a sketch-like model, film it and publish it in a week – and put it on the blog as a stimulus to ourselves and hopefully others.

We’d love to know what thoughts it might spark – please do let us know.

Clocks for Robots has a lot of influences behind it – including but not limited to:

Josh DiMauro’s Paperbits

e.g. http://www.flickr.com/photos/jazzmasterson/3227130466/in/set-72157612986908546

http://metacarpal.net/blog/archives/2006/09/06/data-shadows-phones-labels-thinglinks-cameras-and-stuff/

Mike Kuniavsky:

- http://www.orangecone.com/archives/2010/06/smart_things_ch_9.html

- http://www.ugotrade.com/2009/03/18/dematerializing-the-world-shadows-subscriptions-and-things-as-services-talking-with-mike-kuniavsky-at-etech-2009/

Bruce Sterling: Shaping Things

Tom Insam‘s herejustnow.com prototype and Aaron Straup Cope’s http://spacetimeid.appspot.com/, http://www.aaronland.info/weblog/2010/02/04/cheap/#spacetime

We made a quick-and-dirty mockup with a Kindle and http://qrtime.com

23 Comments and Trackbacks

1. olishaw said on 22 September 2011...

The video is locked as private

2. atomless said on 22 September 2011...

watch 10:55 into this http://www.youtube.com/watch?v=o03wWtWASW4

3. Timo said on 22 September 2011...

Fixed now! Sorry.

4. tamberg said on 22 September 2011...

Interesting. If you look at Google Goggles or Facebook Face recognition, could it be that soon every photo of every place is good enough to serve as a marker in space (or even time)? I.e. the robot readable world becomes a superset of our world, instead of a subset?

5. Trevor said on 23 September 2011...

For some reason reading this made me start thinking of my mobile device as a kind of animal – a curious plastic-clad dog that is sniffing around (including full checkups of the other dogs in the room) and occasionally alerting me to things of interest based on what it has picked up or relieving itself of data in just the right spot.

If you haven’t seen it this platform/app http://www.neuaer.com/ is doing some interesting things on tagging and creating actions based on immediate surroundings.

6. Brian Suda said on 23 September 2011...

Designing clocks for robots reminds me of communications beyond human’s ability to see it. There have been efforts to create signals in out-of-band wavelengths. The Graffiti for Butterflies project http://dziga.com/graffiti/ used Ultraviolet light to attract butterflies to a food source using a UV image of a large flower.

Have you ever tried to hold your TV remote or any other Infrared light source up to a digital camera lens? Most CCD chips will “see” the light and it will appear purple on the camera’s screen. This is because the camera can see more wavelengths than our eyes. Maybe we can assume robots will see more than us too.

While QR Codes are interesting, they aren’t really human friendly. What if you mixed the two worlds of machine-readable codes and out-of-band communications into one? How about a regular clock that humans can tell the time from, but it is actually a digital screen producing an Infrared QR code for the camera that humans don’t even know exists. There are a few hiccups, firstly how would you know where to point the camera if humans can’t see the Infrared light, but beyond that it would be just like SVK comic book, a parallel time, a parallel clock possibly talking in UTC or “Swatch Internet Time” or UNIX Epoch seconds rather than something designed for humans.

7. Greg Borenstein said on 23 September 2011...

Great piece! In the past I’ve been a QR Code skeptic, but something about this (especially its accidental appearance in the background of photos) made it feel more gentle, less like a billboard blasting across a robot’s vision and screaming for attention and more, is empathetic the wrong word for an inhuman eye?

Something about it also reminded me of this diagram from Dan O’Sullivan and Tom Igoe’s classic Physical Computing book:

http://www.flickr.com/photos/unavoidablegrain/6173573943/in/photostream

That picture is for output: how the computer can communicate to us. It feels like we need another one for input: how the computer can see/hear/detect/sense/feel us. What would its exaggerated face look like?

8. Mike Marinos said on 23 September 2011...

Great topic. SVK type overlays immediately came to mind too. Also that the machines are not limited to just 5 sensory inputs nor the largely predefined way these inputs are processed. They could even use the reconstruction of brain activity.

9. John Currie said on 23 September 2011...

I am not really sure what the practical applications for this clock would be, how would it be used? I agree it really is a “cool” looking device but apart from the human readable clock, how would a computer or smart phone use it in real world applications.

10. bobbie said on 23 September 2011...

as an exploration of a concept, I think this totally hits it. But in practice I tend to agree with Brian — can you explain why the robot readable world has to be apparent in this way? Cameras see differently to humans; they can.

At a very simple level, could you not use something that imposes itself less on the environment, or is more sympathetic, than a QR Code? After all, a QR code means only one thing in any spectrum; understanding it is a matter of being able to read the code. Something that exists in different registers — that doesn’t need a rosetta stone to unlock it in order to be useful at all — would make more sense to me. I imagine something much more like the invisible inks of SVK.

Or, have you seen this stealth tank that uses QR codes outside the human visible spectrum?

http://www.newscientist.com/blogs/onepercent/2011/09/stealth-tank-learns-to-transmi.html

I can imagine a robot-readable world as a ghost world in which the machines are able to communicate without ever pushing themselves into our line of sight; a world that only manifests itself to us when we ask it to.

11. bobbie said on 23 September 2011...

oop “cameras see differently to humans; they can see things we can’t. That’s an advantage.”

12. Dan Burzo said on 24 September 2011...

I’m probably misinterpreting or it may be tangential to the conversation, but I wanted to make the connection to Kevin Slavin’s description of Terminator’s Augmented Reality in his talk “Reality is Plenty, Thanks”:

“the world with a bunch of informatics overlaid on it, as if a f** computer needs to read, as if this would be the way for a computer to note information to itself, to write it and then read it”

(video: http://www.mobilemonday.nl/talks/kevin-slavin-reality-is-plenty-thanks/)

Why would robots need to read the time? Cannot they simply be aware of what time it is? (eg. searching in photos for robot-time vs. reading the image metadata, which includes the time)

13. Ishac said on 25 September 2011...

It’s a good provocation / starting point for discussion around human/robot interpretation of reality. Time and space are used and read differently by humans and robots, and it’s interesting to combine sources and channels like in this case (visual reading for digital content).

However, as Dan commented, I also asked myself “why?”. If robots need authentication, using a human channel is not the most efficient way. Also all this works only if humans or robots trust the source (the information on the clock, in that case). But what does trust mean for a robot? Does trust have shades of grey in the digital world, or is it just Yes or No? Probably how much humans trust a digital source is quite different from how a robot trusts a human source…

If robots really need authentication from other robots, they should probably use a robot channel of communication. They could just talk ‘robot’ with other robots to confirm they are here, now.

Regarding the camera representation while pointing to the code, I cannot think of a better way to know what the robot (mobile phone) is seeing than showing it to the human, if the human is responsible for finding the code. Visual codes are for humans, or for humans that help robots to point at the code. Maybe we should just let robots find the codes themselves, but then probably they don’t need visual codes anymore if humans are not involved in the action.

14. Chris Johnston said on 5 October 2011...

I still have no damn clue what the point of this clock is. Certainly not to synchronize a robot’s internal clock, which, like everything else these days (computers, GPS receivers, cell phones, etc), would simply receive a signal from the master atomic clock.

15. Sumit Pandey said on 8 October 2011...

The concept of a robot readable world in itself seems pretty fascinating. However I would agree with Dan that a simple overlay of human readable and robot aware data would lead to an obvious “why” situation. Also, technically a lot of data that we consider “human readable” is very easily read and parsed by machines. The real question in that situation would be the area of meaning making and not just literal translations. If objects could read and subsequently react/behave it could lead to a much less scripted daily experience.

It would be brilliant if we could have a symbiotic relationship with machines, helping them make meaning of the world and them helping us uncover/stumble upon a world of machine readable information (AR?). (Although now it sort of sounds like Terminator 2 :))

16. Rakan Albader said on 9 October 2011...

Hi,

This Amazing Clock is it For Sale ???

17. badr m said on 20 October 2011...

I’ve written a smart tutorial on how to build something like that in PHP on a LAMP server. All you’ll need is a netbook and a screen you are willing to spare. Follow the instructions and hide the netbook out of sight while keeping it connected to a minimal LCD screen (old ones are quite cheap) and voilà, you’ll have this.

http://wp.me/pIp23-9W

18. Oli said on 22 November 2011...

There’s something remarkably calming about that video.

Q: What sort of display is being used in the clock? It looks remarkably like a Kindle one.

19. Productora Audiovisual Starporcasa said on 17 June 2012...

From our production company, we want to congratulate you: the watch with the QR code is a breakthrough. In our productions we are betting heavily on this technology, which lets us update content very quickly and makes it very visible, given the number of smartphones that currently exist.

20. Ollie said on 10 August 2012...

This tech could be used by police patrol cars.

All new consumer cars have a ticking QR clock as well as or instead of a reg plate.

Police simply capture the code on the car to give them concrete evidence of the time they saw the vehicle and exact location.

Dunno..i’m new to this tech talk so might have got the wrong end of the stick.

21. Eric Gould said on 14 November 2012...

Hi BERG,

I found your blog post on the QR Clock for Robots. Great article and concept. The video is quite good, too.

Have a terrific week.

Best,

Eric G. \\ Seattle, WA \\ surf2air.com \\ @kinolina \\

Trackback: Clock has a QR code that is constantly updated 7 October 2011

[…] on 07 October 2011. Tags: berg london, qr-code, relogio. The latest creation from the Berg London studio is fascinating: they created a standard digital clock that, instead of showing a numeric display […]

Trackback: Learning After Effects | Roowilliams 25 June 2012

[…] with After Effects and, inspired by Matt Jones @ Berg London‘s concept video for Clocks for Robots, the first thing I wanted to try was mapping a shape layer to a surface and have it rotate and […]