You may remember Suwappu, our toy invention project with Dentsu: the woodland creatures that talk to one another when you watch them through your phone camera. You can see the design concept film here or (and now I'm showing off) in New York at MoMA, in the exhibition Talk to Me.

Here’s the next stage, a sneak peek at the internal app prototype:

Direct link: Suwappu app prototype video, on Vimeo.

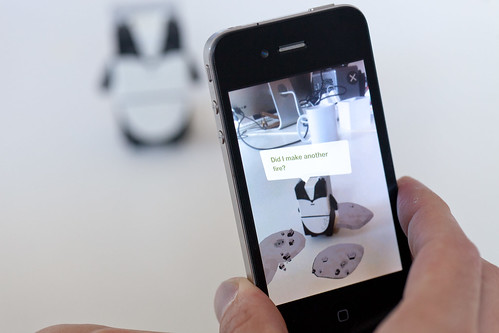

It's an iPhone app that acts as a window into Suwappu, where you can watch Deer and Badger talk as you play with them.

Behind the scenes, there's some neat technology here. The camera recognises Deer and Badger just from what they look like. It's a robot-readable world, but there's not a QR code in sight: the camera picks up on the designs of the Suwappu creatures' faces. Technically this is markerless augmented reality, and it's cutting-edge computer vision.

What's also neat is that the augmented reality is all in 3D: you suddenly see Deer inside a new environment, one that moves around and behind the toy as you move the phone around. It's all tabletop too, which is nicely personal. The tabletop is a fascinating place for user interfaces, alongside the room-scale interfaces of Xbox Kinects and Nintendo Wiis, the intimate scale of mobiles, and the close desktop of the PC. Tabletop augmented reality is play-scale!

But what tickles us all most about Suwappu is the story-telling.

Seeing the two characters chatting, and referencing an event just out of shot, is so provocative. It makes me wonder what could be done with this story-telling. Could there be a new story every week, some kind of drama unfolding between the toys? Or maybe Badger gets to know you, and you interact on Facebook too. What if, one day, Deer mentions a new character, and a couple of weeks later you see it pop up on TV or in the shops?

The system all this would require is intriguing: what does a script look like when you're authoring a story for five or six woodland creatures, plus one or two human kids who are part of the action? How do we deliver the story to the phone? What stories work best? This app scratches the surface of that, and I know these are the avenues the folks at Dentsu are looking forward to exploring in the future. It feels like inventing a new media channel.

Suwappu is magical because it's so alive, and it fizzes with promise. Back in the 1980s, I played with Transformers toys, and in my imagination I thought about the stories in the Transformers TV cartoon. And when I watched the cartoon, I was all the more engaged for having had the actual Transformers toys in my hands. With Suwappu, the stories and the toys are happening in the same place at the same time, right in my hands and right in front of me.

Here are some more pics.

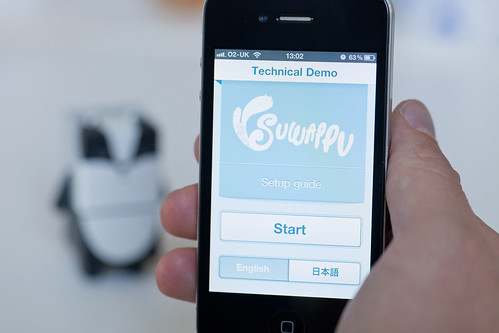

The app icon.

Starting the tech demo. You can switch between English and Japanese.

Badger saying “Did I make another fire?” (Badger has poor control over his laser eyes!)

Deer retweeting Badger, and adding “Oh dear.” I love the gentle way the characters interact.

You can’t download the iPhone app — this is an internal-only prototype for Dentsu to test the experience and test the technology. We’re grateful to them for being so open, and for creating and sharing Suwappu.

Thanks to all our friends at Dentsu (the original introduction has detailed credits), the team here at BERG, and especially Zappar, whose technology and smarts in augmented reality and computer vision have brought Suwappu to life.

Read more about the Suwappu app prototype on Dentsu London’s blog, which also discusses some future commercial directions for Suwappu.

And, finally, a small piece of gaming nostalgia that made me smile: