I saw this picture via The Online Photographer a few days ago. It’s a Leica M4, being sold second-hand right now on eBay, for the premium prices such cameras command.

I loved the wear at the edges, where the black paint has been worn away to reveal the brass underneath. It’s not broken; it hasn’t been mistreated. It’s just been well-used in its 35-odd-year lifespan.

And, in some ways, it’s more attractive for its wear. This isn’t a camera that’s been locked away in its packaging by an over-protective collector; it’s been well-used for its intended purpose. Part of the attraction to such an object isn’t just the aesthetic quality of its patina: there’s also something attractive about the action that wear represents. As a photographer, I’m attracted to this wear because in some ways, it represents the act of photography.

I’m not sure I’m explaining this well. Here’s another example.

I was looking through my links for other articles about wear and patina, and I found this Reuters photograph from last year. It’s of the floor of a Tibetan monastery, where, over twenty years of daily prayer, Hua Chi has worn his own footprints into the floor.

He has knelt in prayer so many times that his footprints remain deeply, perfectly ingrained on the temple’s wooden floor.

Every day before sunrise, he arrives at the temple steps, places his feet in his footprints and bends down to pray a few thousand times before walking around the temple.

The footprints are three centimeters (1.2 inches) deep where the balls of his feet have pressed into the wood.

1.2 inches of prayer. There’s something beautiful about the smooth imprints of a human foot worn into wood. But the wear itself also comes to symbolise the action that led to it: in this case, Hua Chi’s prayers.

Patina is the effect of actions made solid; photography into worn paint, prayer into a worn floor. It is verbing turned into a noun.

Shared Lives

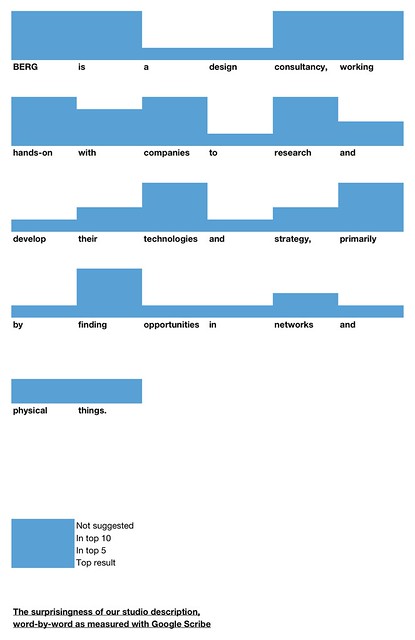

Nouns and verbs. That reminded me of this post about “The Life Of Products” by Matt W, from nearly four years ago. Matt wrote:

Products are not nouns but verbs. A product designed as a noun will sit passively in a home, an office, or pocket. It will likely have a focus on aesthetics, and a list of functions clearly bulleted in the manual… but that’s it.

Products can be verbs instead, things which are happening, that we live alongside. We cross paths with our products when we first spy them across a crowded shop floor, or unbox them, or show a friend how to do something with them. We inhabit our world of activities and social groups together… a product designed with this in mind can look very different.

Wear is, of course, both a noun and a verb. It’s the verb that inevitably happens through use, and it’s the noun that the verb leaves behind. Patina is the history of a product written into its skin.

And, of course, it takes time for wear to occur. Objects start their lives pure, unworn, ready to be both used and shaped by that use. In Products are People Too, Matt’s 2007 talk from Reboot 9, he said:

Products exist over time. We meet them, we hang out with them, we live life together.

Patina is a sign of a life shared.

Here’s a life I’ve shared.

This is my three-and-a-half-year-old laptop. It’s my second aluminium Mac, and, just as with my previous laptop, the surface has tarnished right underneath where my palms rest. It’s not a fault – that black speckling is just what happens when perspiration meets aluminium. It’s not as beautiful as the Leica, or the monastery floor – but it’s not as ugly as cracked and chipped plastic.

I think that might be one reason I’ve kept it quite so long: the material and form of the exterior have encouraged me to hold onto the laptop. Certainly much longer than if it had been poorly constructed, becoming damaged rather than worn.

In his talk at Frontiers of Interaction in 2009, Matt J showed this photograph of Howies’ “Hand-Me-Down jacket”.

It’s a jacket that’s designed to last. Howies ensure they have the materials to repair it, encouraging the owner to mend the jacket rather than throw it out. Inside the jacket is the label above: name tags to last several generations, indicating periods of ownership.

The label is surprising because it serves as a reminder that the product will last. The encouragement to pass something on, and to measure ownership in years, acts as a reminder that there’s no reason to throw the jacket out.

It seems absurd to have to be reminded of that.

But: how many essentially functional pieces of clothing have you or I thrown out? How many items that could be repaired have ended up in the bin? How many objects have never had the time to acquire a patina – thrown out before their time was truly up?

It’s sad that we have to be reminded that objects can last. I cannot deny that there’s a role for inexpensive, cheaply-manufactured, and somewhat disposable products – but they shouldn’t condition us into thinking that’s how all products are.

Designing things that want to be kept

I read an article – which, alas, I can’t find a link to at the moment – about the disposal and lifespan of mobile phones in the USA. The most shocking item in it was that, when questioned as to the lifespan of a mobile phone, most Americans responded with “about 24 months”. A mobile phone may not last like a Leica or a Stradivarius… but it’ll last a good bit longer than two years before it’s beyond use.

24 months was, of course, the length of common cellphone contracts. And so, as contracts expired, and network providers told their customers they were eligible for a new phone, those customers began to assume there had to be something wrong with the old phone. And it would go in the bin.

When the patina an object gains is attractive, it acts as an encouragement to keep it. Good jeans really come into their own as they wear down and develop creases, rips, rough patches. It’s why my favourite pair say something along the lines of “wash me as little as possible!” inside.

It’s important to note: the wear I’m discussing isn’t related to things breaking. Things break because they’re worn out, or poorly designed, or used inappropriately. Patina is that wear which comes from entirely “correct” usage of a product. That usage might be intense – a professional guitarist’s instrument will acquire patina far faster than mine will – but it is, nonetheless, the intended usage of the object.

I’m not sure patina can be designed. After all, it’s a product of the relationship between product and owner.

The form it takes can be shaped – by the materials used in a product, by the nature and frequency of operations that an owner might perform. I suppose that a product can be designed to age gracefully, to wear attractively; it’s just the exact nature of that wear that’s out of a designer’s hands.

In considering the patina a product might develop, you of course have to ask a series of interesting questions: about longevity, about sustainability, about materials, about manufacturing. Going beyond “peak X” and towards “resilient X”, as Matt J said. But I think the most interesting questions – at the very heart of that consideration – are emotional ones. “What if someone adores your product? What if someone really does want to make a product a part of their life? What will your product look like when it’s been worn into the ground by virtue of its own success?”

I don’t think there are single answers to those questions, but they’re great questions to have to consider.

(One answer, which leaps to mind for me, can be found in The Velveteen Rabbit – one of those children’s books that manages to be, of course, both profoundly sad and yet uplifting with it. The toy rabbit in question discovers that if his owner loves him enough, he becomes real. Products are people, too, right there in 1920s children’s books.)

Another Leica M4 to end with: this one belonging to the photographer Jim Marshall, noted for his music photography since the 60s. (If you don’t know the name, you’ll almost certainly know his work.)

Marshall made so many striking images with this camera and others like it, and, in that making, gave it its unique patina. It’s a camera as rock’n’roll as the subjects it shot. Somewhere in that wear – buried in the scuff-marks, the scratches, the flaked paint – are Jimi Hendrix, John Coltrane, Johnny Cash at Folsom Prison: the “life lived together” of Marshall and his camera.