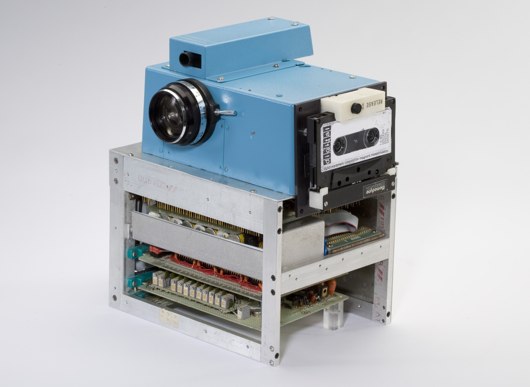

Matt J provided this image of Kodak’s first digital camera, from 1975, and the accompanying story:

It was a camera that didn’t use any film to capture still images – a camera that would capture images using a CCD imager and digitize the captured scene and store the digital info on a standard cassette. It took 23 seconds to record the digitized image to the cassette. The image was viewed by removing the cassette from the camera and placing it in a custom playback device. This playback device incorporated a cassette reader and a specially built frame store. This custom frame store received the data from the tape, interpolated the 100 captured lines to 400 lines, and generated a standard NTSC video signal, which was then sent to a television set.
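The story doesn't say how the frame store filled in the extra lines, so the following is only a rough sketch of one plausible approach: linear interpolation between adjacent scan lines, turning 100 rows into 400. The function name `upscale_lines` and the choice of linear blending are assumptions for illustration, not a description of Kodak's hardware.

```python
def upscale_lines(rows, factor=4):
    """Linearly interpolate between adjacent scan lines (assumed method).

    rows   -- list of scan lines, each a list of pixel brightness values
    factor -- output lines produced per input line (100 -> 400 means 4)
    """
    out = []
    for i, row in enumerate(rows):
        # Blend towards the next line; the last line blends with itself.
        nxt = rows[min(i + 1, len(rows) - 1)]
        for k in range(factor):
            t = k / factor
            out.append([(1 - t) * a + t * b for a, b in zip(row, nxt)])
    return out
```

Feeding it 100 captured lines gives back 400, with each original line followed by three blended ones.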

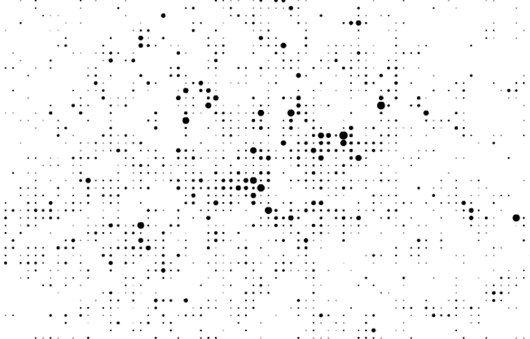

Matt B sent us Anil Bawa-Cavia’s visualisations of Foursquare check-in data for London, Paris and New York. The striking maps (an excerpt of which is displayed above) start by displaying activity across a uniform grid:

In these maps, activity on the Foursquare network is aggregated onto a grid of ‘walkable’ cells (each one 400×400 meters in size) represented by dots. The size of each dot corresponds to the level of activity in that cell. By this process we can see social centers emerge in each city.
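For the curious, the aggregation step can be sketched in a few lines. This is a minimal illustration rather than Anil's actual code: it assumes check-ins have already been projected to x/y coordinates in metres, and the names `aggregate_checkins` and `dot_radius`, along with the square-root scaling of dot size, are my own assumptions.

```python
import math
from collections import Counter

CELL_SIZE_M = 400  # edge length of a 'walkable' cell, from the description


def aggregate_checkins(points, cell_size=CELL_SIZE_M):
    """Count check-ins per grid cell.

    points -- iterable of (x, y) positions in metres (assumed projection)
    Returns a Counter mapping (column, row) cell indices to check-in counts.
    """
    counts = Counter()
    for x, y in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        counts[cell] += 1
    return counts


def dot_radius(count, max_count, max_radius=10.0):
    """Scale dot area (not radius) linearly with activity (assumed choice)."""
    return max_radius * math.sqrt(count / max_count)
```

Each key in the result is one dot on the map; passing its count through `dot_radius` gives a size that grows with activity without letting the busiest cells swamp everything else.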

There’s more at the link above, and also in Anil’s explanation of the techniques used – where he also provides a dump of all the data.

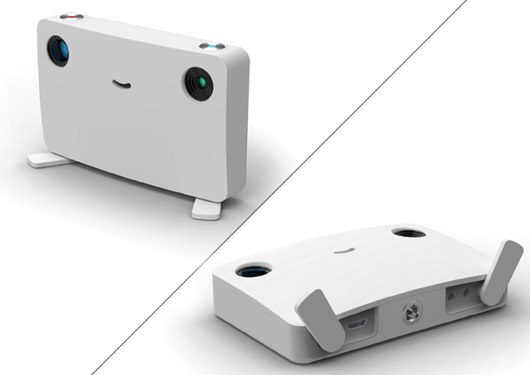

Matt W found this lovely design for a digital camera with a built-in pico projector. Of course the two lenses are eyes. And everything else stems from there.

Nick pointed out that Epic Win is now on sale. It’s a playful to-do list that turns doing tasks into experience points for an avatar, much as Chore Wars did before it. What sets it apart for me is just how much value there is in making a functional piece of software – in this case, a to-do list – well-designed and beautiful. It’s fun to use, without getting in the way of the basic task of making lists, and I want to go back to it. It’s worth playing with just for the consistency of its visual design.

Finally, I really liked David Arenou’s “Immersive Rail Shooter”. In it, he takes the standard video-game light-gun game and adds the ability to use the environment for cover, by placing AR tags around a room for the console’s camera to detect. From his site about the project, it appears to be very much a working prototype (as opposed to a proof-of-concept video).

What’s really fun for me is that although it uses markers and computer vision to detect the player’s location, the “augmenting” of reality is done not through a camera and a screen – but by changing how the player interacts with the room. All of a sudden, the chair in the real world becomes cover in the game world, and so you end up ducking and diving around the living room. No glasses, no holding a mobile phone in front of your face, but the boundary between the game and reality has very definitely been blurred.
